The ceaseless advance of technological innovation, especially AI, is reshaping our lifestyles, professional landscapes, and interactions with the world. As AI’s capabilities burgeon, establishing a robust governance framework becomes imperative. The impact of AI on society demands careful choreography that balances innovation with legal and ethical considerations.

Picture the AI landscape as a grand ballroom, where the dancers are the myriad applications of AI technology (autonomous vehicles, medical diagnostics, virtual assistants, and beyond), each executing intricate moves that define the course of our technological future. Soft law, like a dance partner, offers an alluring invitation to a flexible and adaptable dance. It is a graceful sway to the rhythm of AI innovation, allowing the industry to pivot and twirl with the rapidly changing beats. On the opposite side of the dance floor stands hard law, the referee: a vigilant guardian overseeing the performance, ensuring that the dance adheres to a set of predetermined rules, and providing a structured approach to governance that soft law might struggle to enforce – or does it?

As AI continues to develop and its implications for society evolve, we are prompted to ask which dance partner should lead this complex performance:

Should we let soft law take the lead with its graceful adaptability, or is it time for the referee to step into the spotlight, providing structured and more stringently enforceable oversight to ensure responsible AI development?

Breaking down the race toward AI regulation

There is an unmistakable awareness that a race is underway toward effective regulation that can keep pace with the rapid strides of AI. Central to this race is the so-called ‘pacing problem’: the notion that technological innovation is increasingly outpacing the ability of laws and regulations to keep up. It is closely related to the ‘Collingridge dilemma’, under which a technology’s effects are hard to foresee while it is still easy to steer, and hard to steer once those effects are evident. The need for governance has intensified alongside the advancements, prompting a global discourse on how to strike the delicate balance between nurturing innovation and safeguarding against potential risks.

This discourse is fueled by several factors:

- The urgency of legal and ethical frameworks

With AI technologies permeating various aspects of our lives, from healthcare and finance to education and transportation, the need to establish legal and ethical frameworks has become urgent. The race toward regulation is driven by the recognition that without clear guidelines, the potential for unintended consequences and ethical breaches looms large.

- Global collaboration vs. fragmentation

The race for regulation is not only against time but also against the risk of fragmented regulation. AI knows no borders, and a patchwork of inconsistent regulations could hinder the potential for global collaboration. The challenge lies in creating regulatory frameworks that are robust enough to address universal ethical concerns while being adaptable to diverse cultural and technological landscapes.

- Public and private sectors in lockstep

While industry-led initiatives and self-regulation play a role, there is a growing realization that the refereeing on the dance floor must be a collaborative effort, with governments and regulatory bodies actively participating in shaping the rules. Striking a balance that leverages industry expertise while ensuring public accountability is a central challenge.

- Navigating the uncharted

Regulators find themselves navigating uncharted territory. The rapid evolution of AI often outpaces the ability to draft and implement regulations. Consequently, there is a push for regulatory frameworks that are adaptive, capable of evolving alongside technological advancements without sacrificing the core principles of ethics and accountability.

- The role of cross-industry dialogues

The race toward regulation is not a solo endeavor but a relay where insights from various industries are crucial. Cross-industry dialogues become a key component, fostering the exchange of best practices, lessons learned, and collaborative problem-solving. This collective effort aims to ensure that regulations are comprehensive, forward-looking, and effective.

Mapping fundamental AI regulatory developments

Not surprisingly, regulatory frameworks are taking shape across the globe in response to rapid advancements in AI. The European Union (‘EU’) has been proactive in AI governance, addressing the risks generated by specific uses of AI through a set of complementary, proportionate and flexible rules that are also intended to give Europe a leading role in setting the global gold standard.

Across the Atlantic Ocean, in the United States (‘US’), there has been a pervasive push to regulate AI technologies. A recent report from the Software Alliance revealed a remarkable surge in legislative efforts: state legislators introduced nearly 200 bills focused on AI this year alone, a staggering 440% increase compared to the previous year, of which 14 became law. At the federal level, a continuous stream of bills has been proposed, each addressing specific risks associated with AI technology, including deepfakes in elections, deceptive practices and the potential for discrimination in employment processes. In addition, President Biden’s recent Executive Order emphasizes AI safeguards. Back in Europe, the UK’s AI Safety Summit at Bletchley Park marked what has been called a “diplomatic breakthrough”.

All of this suggests that the global regulatory and legislative landscape reflects a concerted effort to grapple with the multifaceted challenges posed by AI, showcasing a commitment to proactive governance and responsible innovation.

Soft law’s dance or referee’s watch?

Going back to the challenges we are facing – fast-paced technological advancement and the consequent (potentially) far-reaching implications for individuals and society at large – the real question we are prompted to ask is:

What should policymakers do in light of these new challenges?

Embracing extremes does not seem to offer a viable solution. Despite ongoing efforts toward regulating the AI landscape through hard law measures, some industry experts resist the idea of imposing legally binding obligations on AI. They hold that lawmakers and regulators cannot merely reinforce the technocratic regulatory approaches and command-and-control tactics of the past. In a world where seizing opportunities for “innovation arbitrage” is more accessible than ever, oppressive crackdowns on emerging technologies often yield unintended consequences, potentially stifling innovation that drives human betterment at a jurisdictional or even broader level and further complicating the governance scene. Instead, they advocate for an “all hands on deck” approach that moves away from the old, inflexible top-down approaches and hinges on the use of “soft law” principles to help fill the governance gap as the pacing problem accelerates. This entails relying on multi-stakeholder processes, guidelines, workshops, self-certification, and best practices and standards to comprehensively regulate the swiftly evolving AI landscape. Many sectors have already adopted soft law as the way forward for modern technological governance, especially in the US, including driverless cars, drones, the Internet of Things, mobile medical applications, and artificial intelligence.

Still, doubts persist regarding the efficacy of soft law in the realm of AI. Some argue for the necessity of hard law as a safeguard for individuals, decrying soft law as either too lax (and open to private abuse) or too informal (and open to government abuse). In truth, given the conduct of tech giants in recent years, it is hard to assert that we can or should rely on them for self-regulation. Others have characterized recent endeavors towards self-regulation as “difficult and eventually ineffective”.

Despite differing opinions and arguments, what is clear when zooming in on the governance challenges in the realm of AI is that a new governance vision for the technological age is required if we are to “tip the balance in favor of humanity”, as UK Prime Minister Rishi Sunak emphasized during his closing press conference at the Bletchley Park AI Safety Summit. Bearing this in mind, we will first have to take a step back and ask ourselves tough questions about the exact problems we are aiming to solve and which governance mechanism we want to put forward as the lead dancer in the process towards responsible and effective AI governance.

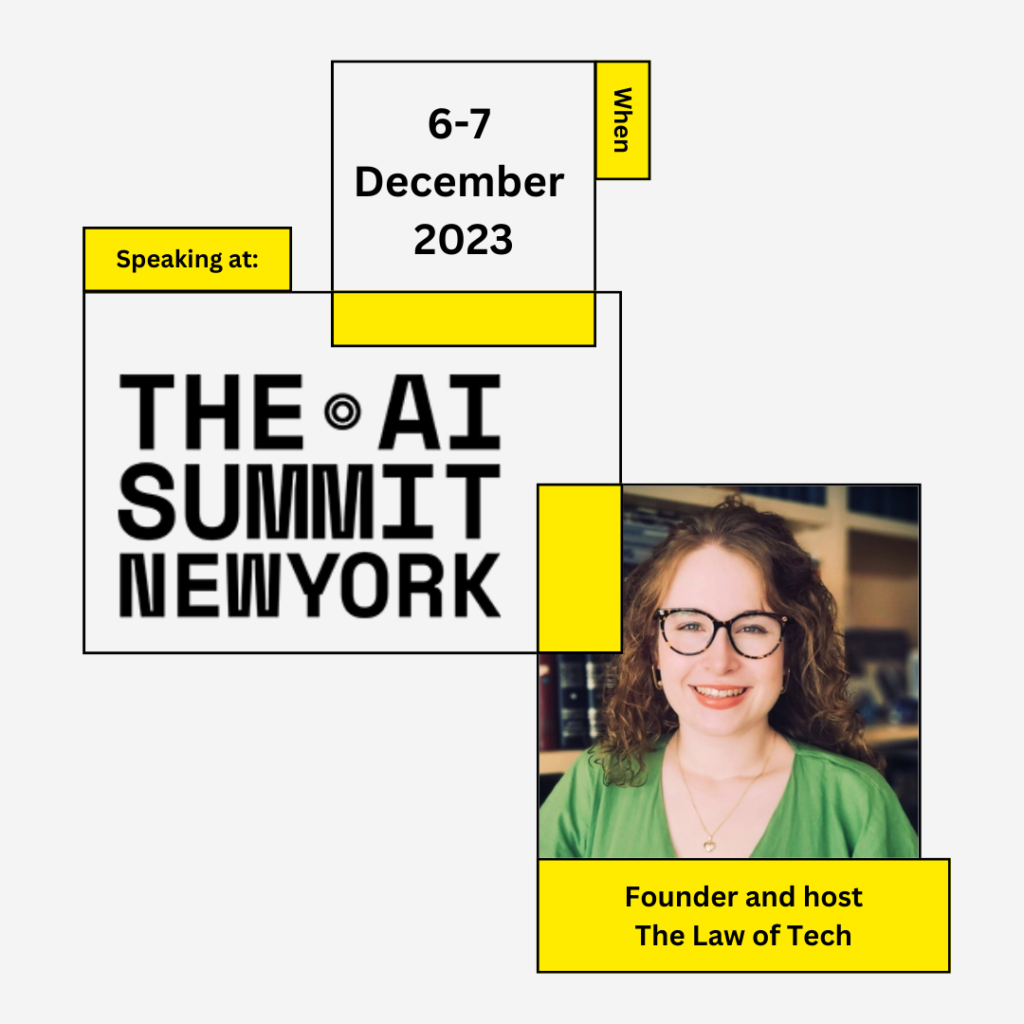

Let’s continue the conversation together: Join us at the 2023 NY AI Summit

We will continue this conversation at the 2023 AI Summit in New York on the 6th of December 2023 as the moderators of the panel titled “AI-powered World: Key Considerations Around AI Policy Before It Is in Full Force”. For this panel, we are joined by an incredible lineup of speakers including Var Shankar (Executive Director, Responsible AI Institute), Patrice Ettinger (Vice President, Chief Privacy Officer, Pfizer), Magdalena Konig (Representative from ADNOC Legal, Governance & Compliance), and Michelle Rosen (General Counsel, Collibra).

During our panel session, we will be addressing some of the key questions and concerns to be faced in the age of AI, including:

- What are the most pressing AI policy issues?

- Do we need AI regulation, and if so, will it curb innovation? Can industry be left to self-regulate?

- What are key parameters to responsible, ethical, and sustainable AI?

If you would like to join our session, register here for the conference and feel free to send us a message to continue the conversation.

And in case you missed our panel discussion at the 2023 London AI Summit, check out our blog post to get a feel for what is to come!

Support. Empower. Connect. This is The Law of Tech.